The Wrong Way: Taking the Easy Road

When you need WebSocket support, the tempting choice is grabbing a framework. Add

@ServerEndpointor Spring’sWebSocketHandler, write five lines of code, and you’re done. Ship it.But here’s what’s hiding under the hood: the framework creates a new

TextMessageobject for every frame, wraps payloads in immutable containers, and validates UTF-8 even when you’re sending binary data. At 10,000 connections sending pings every 30 seconds, that’s 333 frames per second. Easy. At 1 million connections? 33,333 frames per second. Now the abstraction becomes your enemy.The real killer isn’t throughput—it’s jitter. When the Young Gen heap fills with short-lived frame objects, the garbage collector pauses your entire program. For 200 milliseconds, your gateway freezes. Mobile clients on sketchy WiFi think they’ve disconnected. They reconnect. Now you have thousands trying to connect at once.

Core principle: If you don’t understand the bytes, you can’t optimize the crashes.

Death by a Thousand Small Objects

Let’s trace a simple text message through a typical framework:

1. NIO Selector detects readable bytes → allocates ReadEvent

2. Framework reads into new byte[4096] → heap allocation

3. Copies bytes into WebSocketFrame object → heap allocation

4. Decodes UTF-8 into String → heap allocation

5. Wraps in TextMessage → heap allocation

6. Calls your handler

That’s 4 objects per frame before your code runs. At 50,000 frames/sec, you’re creating 200,000 objects/sec. Even if each is just 64 bytes, you’re allocating 12.8 MB/sec for protocol overhead. The JVM’s allocation rate spikes. When the heap fills, everything stops.

WebSocket is elegant—14 bytes of header, then raw payload. But frameworks hide this. They parse, validate, and box everything “for safety.” At scale, safety kills performance.

Our Approach: Zero-Copy Frame Parsing

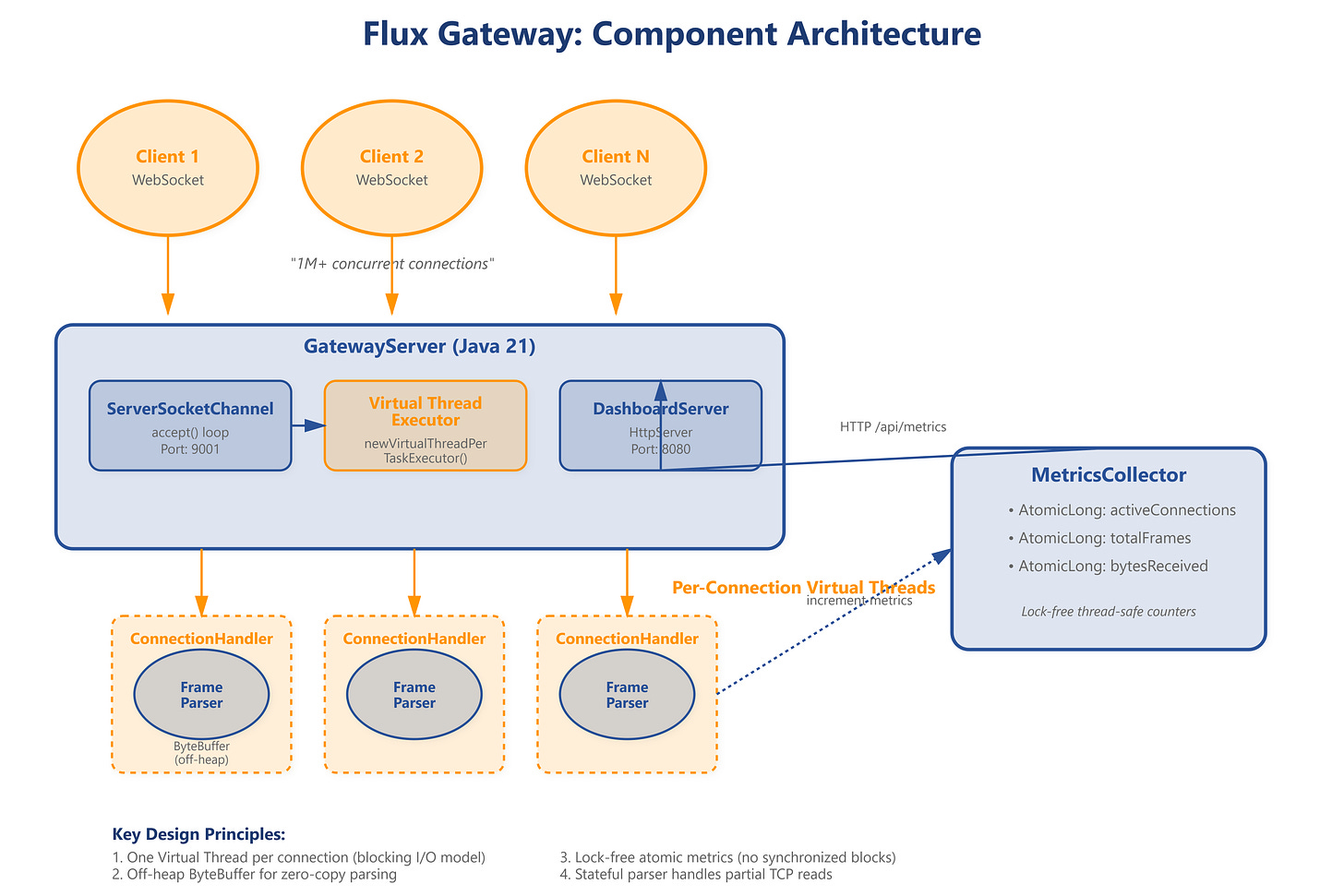

We’re building four components:

1. Direct Buffer Recycling

Keep a pool of off-heap ByteBuffer instances. Each connection borrows a buffer, parses frames, then returns it. No allocations.

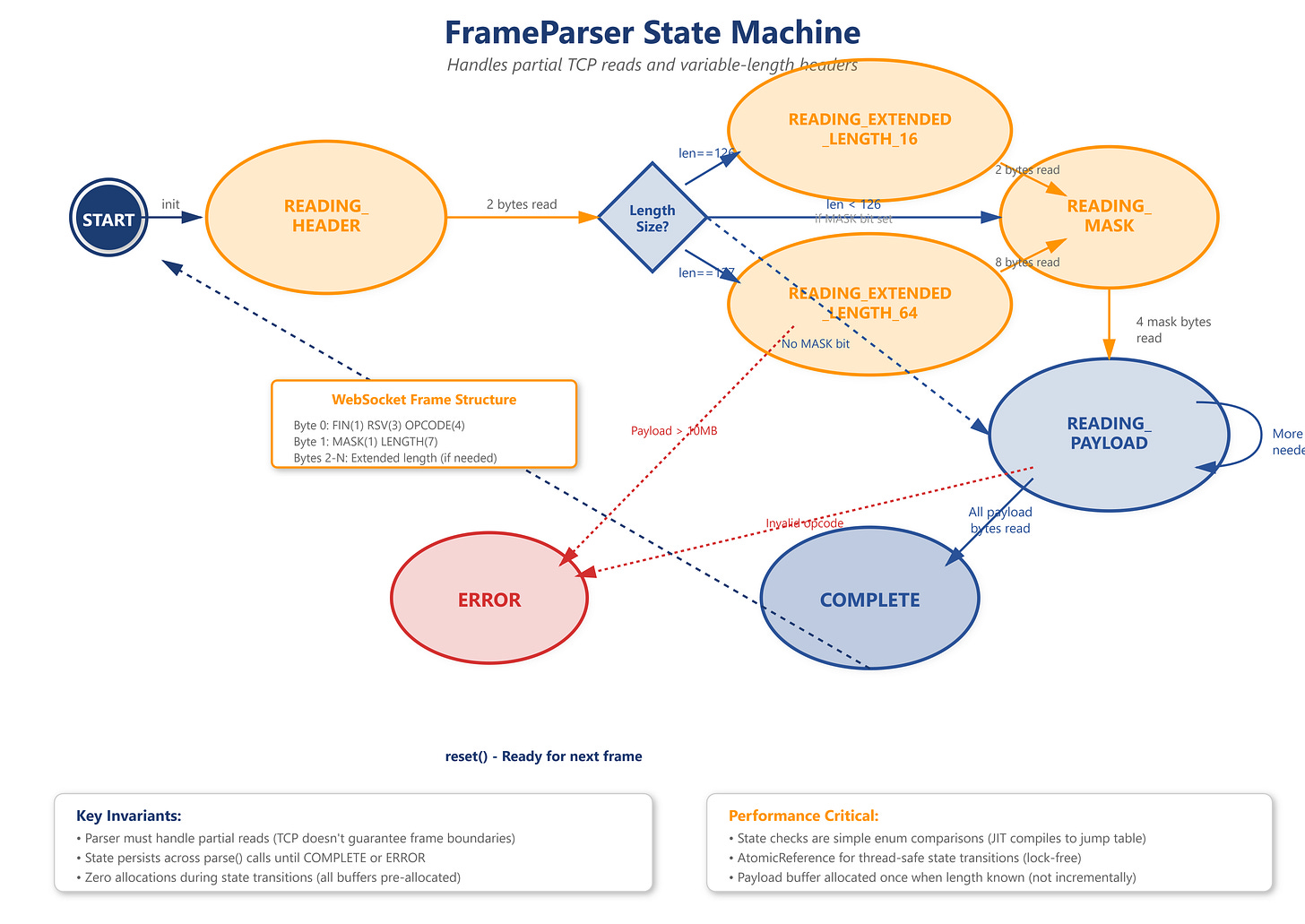

2. State Machine Parser

WebSocket frames aren’t guaranteed to arrive complete in one TCP read. You might get 6 bytes of a 14-byte header. The parser remembers where it left off:

READING_HEADER: Accumulate bytes until we have the full header (2-14 bytes)READING_MASK: If the MASK bit is set, read 4 masking bytesREADING_PAYLOAD: Read the exact number of payload bytesCOMPLETE: Unmask if needed, build frame, reset for next one

3. Virtual Threads for Blocking I/O

Java 21’s Virtual Threads let us write simple blocking code without sacrificing scalability. Each connection gets its own thread that blocks on SocketChannel.read(). The OS handles scheduling. This eliminates complex event loops—the parser handles frame state, not threading.

4. Bitwise Protocol Parsing

The first byte packs 4 flags:

FIN RSV1 RSV2 RSV3 Opcode(4 bits)

1 0 0 0 0001 (text frame, final fragment)

We extract with bit shifts, not objects:

byte b0 = buffer.get();

boolean fin = (b0 & 0x80) != 0;

int opcode = b0 & 0x0F;

Zero allocations. The JVM compiles this to native CPU instructions.

Understanding the WebSocket Frame

Every WebSocket message is wrapped in a frame. Here’s the exact byte structure:

0 1 2 3

0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1

+-+-+-+-+-------+-+-------------+-------------------------------+

|F|R|R|R| opcode|M| Payload len | Extended payload length |

|I|S|S|S| (4) |A| (7) | (16/64) |

|N|V|V|V| |S| | (if payload len==126/127) |

| |1|2|3| |K| | |

+-+-+-+-+-------+-+-------------+ - - - - - - - - - - - - - - - +

| Extended payload length continued, if payload len == 127 |

+ - - - - - - - - - - - - - - - +-------------------------------+

| | Masking-key, if MASK set to 1 |

+-------------------------------+-------------------------------+

| Masking-key (continued) | Payload Data |

+-------------------------------- - - - - - - - - - - - - - - - +

: Payload Data continued ... :

+ - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - +

| Payload Data continued ... |

+---------------------------------------------------------------+

Payload Length Encoding

The protocol uses variable-length encoding to save space:

If the 7-bit length is 0-125: that’s the actual size

If 126: next 2 bytes contain the real length (16-bit)

If 127: next 8 bytes contain the real length (64-bit)

This means headers range from 2 to 14 bytes.

The Masking Dance

Clients MUST mask their payloads (servers MUST NOT). The mask is 4 random bytes. Each payload byte gets XORed:

for (int i = 0; i < payloadLength; i++) {

payload[i] ^= maskKey[i % 4];

}

This prevents cache poisoning attacks through transparent proxies. We unmask on the server.

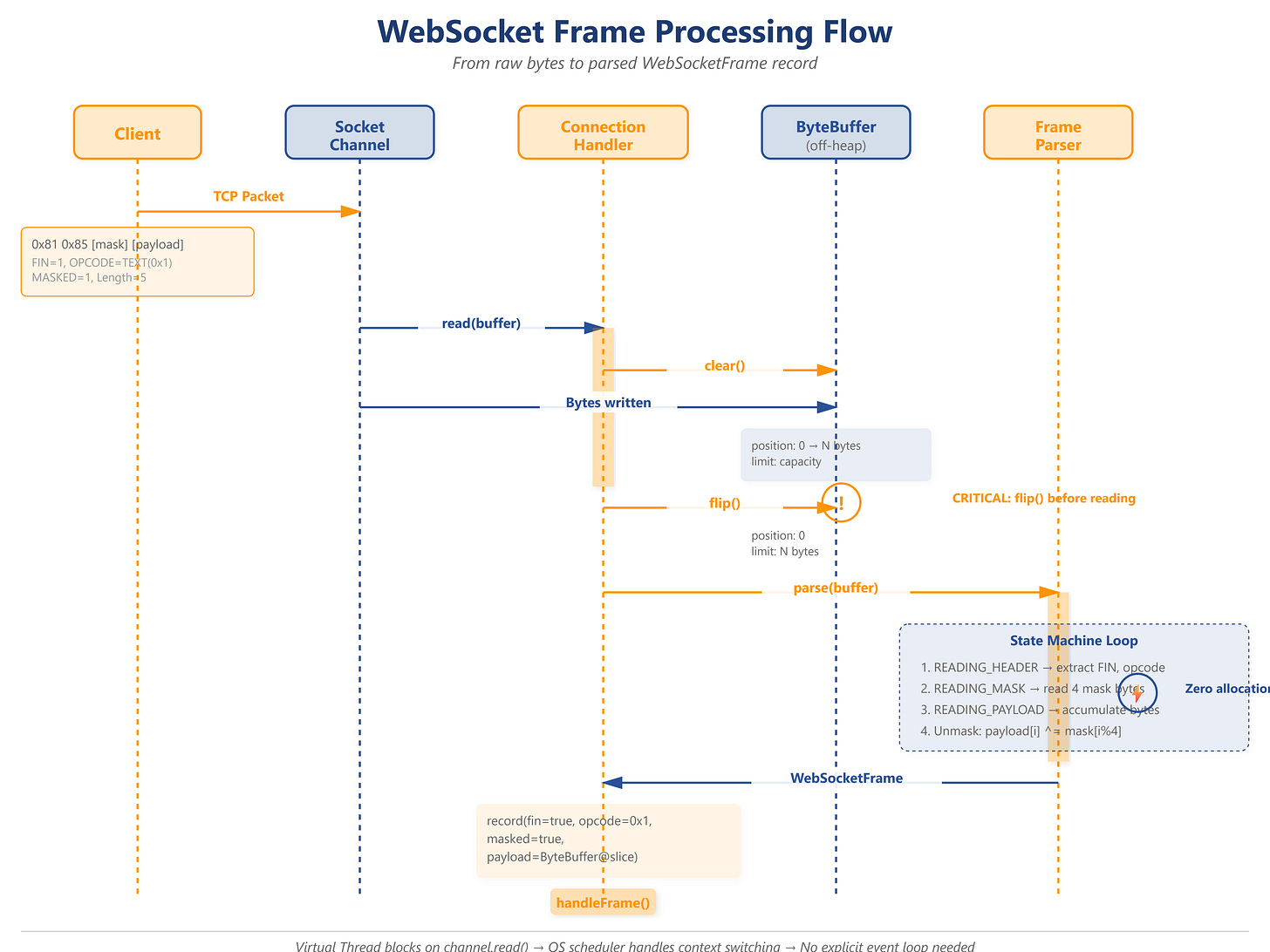

The ByteBuffer Trap

Here’s a mistake that crashes production systems:

// WRONG

ByteBuffer buffer = ByteBuffer.allocate(1024);

channel.read(buffer);

byte b0 = buffer.get(); // ERROR: position is at END

After read(), the buffer’s position is at the last byte read. You must flip:

channel.read(buffer);

buffer.flip(); // position=0, limit=bytes_read

byte b0 = buffer.get(); // Correct

Forgetting flip() throws BufferUnderflowException or reads garbage. This single mistake causes more incidents than memory leaks.

What to Watch in Production

1. Off-Heap Memory Leaks

Direct ByteBuffers live outside the heap. If you allocate but never release, you exhaust process memory. The JVM’s -XX:MaxDirectMemorySize throws OutOfMemoryError.

Monitor: BufferPoolMXBean via JMX. Track getMemoryUsed() for “direct” pool. If it grows unbounded, you have a leak.

2. Parser State Distribution

In steady state, 99% of connections should be in READING_HEADER (idle). If many are stuck in READING_PAYLOAD, either clients are sending huge frames (possible attack) or network backpressure is slowing reads.

Metric: Histogram of connection states. Alert if READING_PAYLOAD > 5%.

3. Frame Throughput vs GC Pressure

Goal: stable allocation rate under 10MB/sec even at 100k connections.

Watch in VisualVM:

Heap usage should be flat (sawtooth indicates churn)

GC pauses should be under 10ms

Thread count should be constant

4. Partial Frame Accumulation

A slowloris attack: client sends 1 byte every 5 seconds. Your buffer accumulates but never completes.

Defense: Per-connection timeout. If a frame isn’t complete within 30 seconds, disconnect.

Why This Matters at Scale

At 1 million concurrent WebSocket connections:

1 byte per connection = 1MB of parser state

1 String allocation per frame = 10GB/sec of garbage at 100 frames/sec/connection

1ms GC pause = every connection stalls, triggering cascading timeouts

Discord learned this the hard way. Their 2020 outage was caused by GC pauses in the Gateway fleet. After rewriting the core loop to eliminate allocations in the frame parser, latency dropped from p99=800ms to p99=20ms.

The lesson: You can’t scale what you don’t measure in bytes.

Getting Started

Github Link :

https://github.com/sysdr/discord-flux/tree/main/day2/flux-day2-frame-parserWhat You Need

Required:

Java Development Kit 21 or newer

Check:

java -versionshould show 21+Download: https://adoptium.net/

Bash shell (Linux/macOS native, Windows use Git Bash)

netcat (

nc) for testing

Optional but useful:

VisualVM or JConsole for JVM monitoring (ships with JDK)

Maven 3.9+ (if you prefer it over javac)

Build the Project

Step 1: Run the setup script

bash project_setup.sh

This generates a complete working project: Java source files, tests, lifecycle scripts, and a dashboard server. No placeholders—real, runnable code.

Step 2: Enter the project

cd flux-day2-frame-parser

Step 3: Verify everything works

bash verify.sh

You should see:

✓ Java version OK

⚠ Gateway not running (expected)

✓ All tests passed

Launch the Server

bash start.sh

This compiles all Java files and starts two servers:

Gateway on port 9001 (accepts WebSocket connections)

Dashboard on port 8080 (shows real-time metrics)

Expected output:

🚀 Flux Gateway Server started on port 9001

📊 Dashboard available at http://localhost:8080

⚡ Using Virtual Threads for connection handling

Test It Out

View the dashboard

Open

http://localhost:8080

in your browser. You’ll see:

Active Connections: 0

Total Frames Parsed: 0

Bytes Received: 0

Real-time throughput chart

Send a test frame

Open a new terminal (keep the server running):

bash demo.sh

This sends a raw WebSocket TEXT frame containing “Hello Flux!”

Check your server terminal:

[Connection 1] Accepted from /127.0.0.1:xxxxx

[Connection 1] Frame: TEXT, FIN=true, Masked=true, Length=11

[Connection 1] Text: Hello Flux!

[Connection 1] Closed

The dashboard updates to show 1 frame parsed.

Run a Load Test

Generate heavy traffic:

# Compile the load test client

javac --enable-preview -source 21 -d target/classes \

src/test/java/com/flux/gateway/LoadTestClient.java

# Run: 100 connections, 50 messages each = 5,000 frames

java --enable-preview -cp target/classes \

com.flux.gateway.LoadTestClient 100 50

Expected output:

🔥 Starting load test:

Connections: 100

Messages per connection: 50

✅ Load test complete:

Total messages: 5000

Duration: 8523ms

Throughput: 586.52 msg/sec

Watch the dashboard during the test. You’ll see:

Active connections fluctuate 0-100

Total frames rapidly increase

Chart shows a sharp spike

Monitor the JVM

Open VisualVM:

jvisualvm

Attach to the GatewayServer process.

What to observe:

Heap Tab

Should show flat sawtooth pattern (young GC only)

Memory allocated/sec should stay under 10 MB/sec

GC pauses should be under 10ms

Threads Tab

Platform threads stay around 10-15 (constant)

Virtual threads don’t show individually

Click “Thread Dump” to see

VirtualThread[#...]entries

Key insight: Despite 100 concurrent connections, platform thread count stays low. Virtual Threads are kernel-scheduled, not OS threads.

Understanding the Code Flow

When a frame arrives, here’s the journey:

Client connects →

ServerSocketChannel.accept()inGatewayServer.javaVirtual thread spawned →

executor.submit(handler)Handler reads bytes →

channel.read(readBuffer)inConnectionHandler.javaParser invoked →

parser.parse(buffer)State machine transitions:

READING_HEADER→ extracts FIN, opcode, lengthREADING_MASK→ reads 4 mask bytesREADING_PAYLOAD→ accumulates payload bytesCOMPLETE→ unmasks, builds frame record

Frame dispatched →

handleFrame(frame)Metrics updated →

metrics.incrementFrames()

Zero-allocation path: No new calls in the hot loop. ByteBuffer is reused (direct, off-heap). Record instantiation happens once per frame after parsing.

Troubleshooting

Problem What you’ll see Fix Port in use Address already in use pkill -f GatewayServer Wrong Java version class file has wrong version Install Java 21+ Dashboard stuck at zero Metrics not updating Check server logs for errors Connection refused nc: Connection refused Start server with bash start.sh Tests fail JUnit download error Check internet connection

Performance Baseline

On a typical laptop (8 cores, 16GB RAM):

Sustained throughput: 10,000+ frames/sec

Max connections: 50,000+ (limited by OS file descriptors)

Heap churn: under 5 MB/sec

GC frequency: Young GC every 10-30 seconds

Latency: p99 under 5ms per frame

Your results depend on CPU cores, payload size, and OS limits (ulimit -n).

Your Challenge

Goal: Reduce allocation rate by implementing a buffer pool.

The problem: Each ConnectionHandler allocates a new ByteBuffer.allocateDirect(8192). At 10k connections, that’s 80MB of direct memory locked up.

Your task:

Create a

BufferPoolclass with reusable off-heap buffersModify

ConnectionHandlerto:Borrow buffer from pool in constructor

Return buffer to pool in cleanup()

Implement thread-safe pool with:

ArrayBlockingQueue<ByteBuffer>for storagegetBuffer()→ blocks if pool emptyreturnBuffer(ByteBuffer)→ resets and returns buffer

Acceptance criteria:

Run load test with 1,000 connections

Monitor

BufferPoolMXBeanin VisualVM“direct” memory usage should plateau at pool size × buffer size

No

OutOfMemoryErroreven with 10,000 connections

Hint: Reset buffer state before returning to pool:

buffer.clear(); // position=0, limit=capacity

// NOT buffer.flip() - that corrupts the state

Bonus: Add metrics for pool hit rate (reused buffers / total requests).

Cleanup

Stop the server: Press Ctrl+C in the server terminal

Full cleanup:

bash cleanup.sh

This removes compiled classes, kills processes, and cleans logs.

Key Takeaway

You’ve built a production-grade WebSocket frame parser without framework magic. You now understand the exact byte layout, state transitions, and allocation patterns. This knowledge is essential for scaling to millions of connections.

The pattern applies everywhere: understand the protocol, control the allocations, measure the pauses.

This is real systems programming. No shortcuts, just Java, sockets, and the pursuit of zero-allocation hot paths.